The following is an Editorial Opinion.

A few months ago, I was honored to have the opportunity to speak with Camille Oren, who with Andrej Verity co-authored a paper published today on Digital Humanitarians titled Artificial Intelligence (AI) Applied to Unmanned Aerial Vehicles and its Impact on Humanitarian Action. I was in good company: other drone journalists, DJI’s Romeo Durscher (one of the originators of the “Drones for Good” movement) and digital humanitarian Patrick Meier were among those interviewed.

The paper – nearly 50 pages of information on artificial intelligence technologies as applied to drones for good – is well worth reading. It’s a thoughtful and thought-provoking piece.

I must respectfully disagree with the conclusion, however.

While I hope that every reader will inform themselves, in these times of seemingly unending disasters, it is my considered opinion that drone programs should be implemented without delay in humanitarian work. It is true that human operators of AI-powered drones, cars, guns, cell phones and a multitude of other tools may use these in ways that violate human rights. However, to broadly limit the use of the tool itself – a tool already in common use – is to limit the good that humanitarians are able to do and, in some cases, to allow needless loss of life.

Do AI-Powered Drones Follow the “Do No Harm” Principle?

The authors are not anti-drone, but they do conclude that AI-powered drones currently represent too much risk to privacy and human dignity to be used in a humanitarian context until they have been more exhaustively tested.

“All the benefits of using drones and AI should be weighed against the risks. This depends on value-judgments and value-weighing which require a general and situational analysis. For example, a general analysis underlying the importance of human dignity would lead to a general proscription of autonomous drones while a contextual analysis may authorize drones in certain cases,” says the paper. “Ethical innovation must be based on the “do no harm” principle meaning that “risk analysis and mitigation must be used to prevent unintentional harm, including from primary and secondary effects relating to privacy and data security”. This is why risks must be investigated, analyzed and minimized leading to a potential rejection of the technology if it is ethically wrong.”

“…If risks are underanalyzed and therefore unknown, humanitarians should consider a general rejection of autonomous drones with potential exceptions.”

There is a risk of misuse of drones, as there is a risk of misuse of any technology. Some of the examples used are indeed disturbing and thought-provoking, and they represent a very legitimate concern:

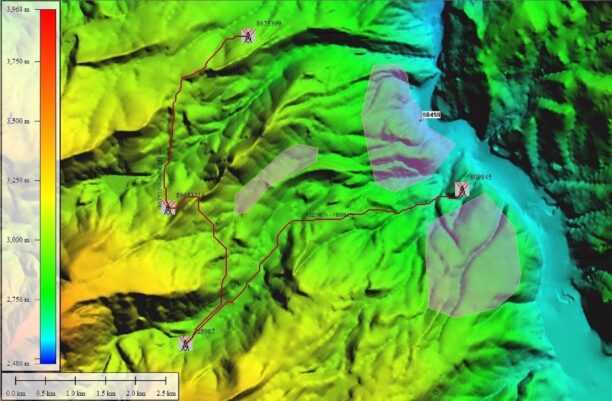

The risk of mass surveillance with drones has often been highlighted as the main ethical issue. When shared with governments, data can be used for domestic surveillance. For example, having really accurate information on building types and damage assessment is useful for humanitarians to improve disaster risk reduction. But this kind of data can also be used by governments to collect information on illegal settlements or tax dodging. In some instances, data collected on vulnerable populations could put their lives at risk.

A relevant example would be the potential risks associated with publishing drone imagery, taken by IOM, of Cox’s Bazar Rohingya Refugees Settlements in Bangladesh on OpenStreetMap. While these images have proven to be useful for humanitarian purposes, they give information on a population displaced by genocide issuing security threats.

Are the Risks Specific to UAVs?

That aerial data creates risks to privacy is undoubtedly true. Certainly, as the authors point out, drone operators working in the humanitarian field must be sensitive about the issues and there must be protocols in place to protect the data – as there are for other sources of imagery.

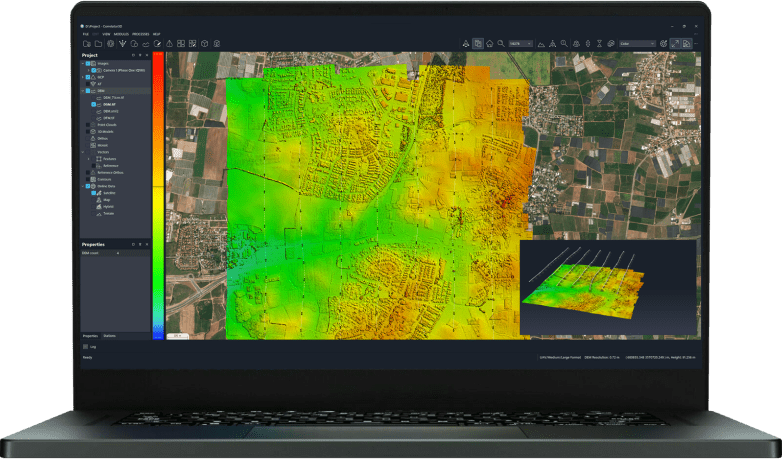

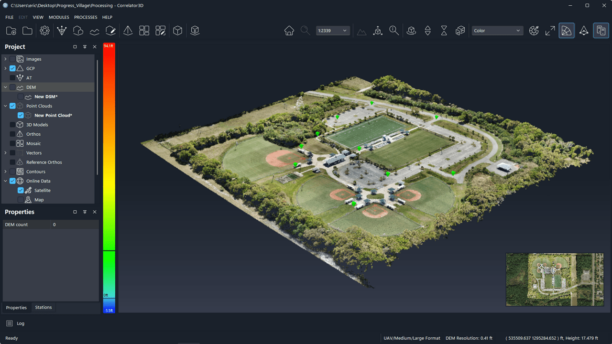

However, aerial data or open mapping data can be gathered by many means: the risk is not limited to UAVs. In addition, that particular cat, to use an idiom, is already out of the bag. You cannot stop the rest of the world from using drones to gather imagery, so should humanitarians limit their use? The same aerial imagery that presents a potential risk to privacy may also be invaluable for providing truth about sensitive situations in a world beset by misinformation. Access to mapping in communities can provide access to resources and protection from disaster. Aerial data may allow faster and more effective building of critical infrastructure, or documentation of natural events.

What About Other Use Cases?

Issues of privacy and human dignity are unarguably critical. To delay the adoption of drone technology and the training of staff in UAV operations while these very important issues are being evaluated, however, is to delay the use of drones in many less controversial and valuable applications. Ultimately, the drone industry has to work together to promote the safe and responsible use of drones, on worksites and especially in a humanitarian context – because there are so many cases in which only drones can provide life-saving humanitarian aid. In search and rescue, the delivery of emergency supplies in the absence of road infrastructure, finding people in collapsed buildings and places that human aid cannot reach, reinstating communication networks, and in countless other situations, only drones can save lives – if we let them.

Miriam McNabb is the Editor-in-Chief of DRONELIFE and CEO of JobForDrones, a professional drone services marketplace, and a fascinated observer of the emerging drone industry and the regulatory environment for drones. Miriam has penned over 3,000 articles focused on the commercial drone space and is an international speaker and recognized figure in the industry. Miriam has a degree from the University of Chicago and over 20 years of experience in high tech sales and marketing for new technologies.

For drone industry consulting or writing, Email Miriam.

TWITTER:@spaldingbarker

