Perhaps one of the most inspiring Drones for Good stories we’ve heard in the last few years is that of Pedro Cruz, a Puerto Rican native who responded to the devastation of 2017’s Hurricane Maria by creating DroneAid, a tool designed to communicate SOS signs on the ground to first responders, during a Call for Code Hackathon. Now a full-time IBM developer, Pedro Cruz is bringing DroneAid to the open source community – making it available to as many people as possible.

The following is a blog post written by Pedro Cruz and republished with permission. Images courtesy IBM.

A developer’s journey from attending a Call for Code hackathon to open sourcing drone tech as one of Code and Response’s first projects

By Pedro Cruz, IBM Developer Advocate and Founder of DroneAid

On September 20, 2017, Hurricane Maria struck my home, Puerto Rico. After surviving the record-breaking Category 5 storm and being personally affected by its aftermath, I decided I was going to make it my mission to create technology that could help mitigate the impact hurricanes have on our island.

Inspired by Call for Code

Can you imagine trying to plan relief efforts for more than three million people? People in rural areas, including a community in Humacao, Puerto Rico, suffered the most. The people in this community were frustrated that help was promised but never came. So, the community came together and painted “water” and “food” on the ground as an SOS, in the hope that helicopters and planes would see their message. For me, it was sad and frustrating to see how different the reality was outside of the metro area. Lives were at risk.

Fast-forward to August 2018. Less than a year after the hurricane hit, I attended the Call for Code Puerto Rico Hackathon in Bayamón, Puerto Rico. I was intrigued by this global challenge that asks developers to create sustainable solutions to help communities prepare for, respond to, and recover from natural disasters.

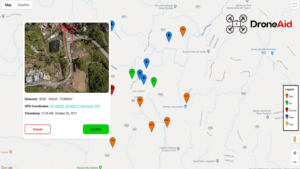

The SOS messages after the hurricane inspired me to develop DroneAid, a tool that uses visual recognition to detect and count SOS icons on the ground from drone video streams overhead, and then automatically plots the emergency needs captured via video on a map for first responders. I thought that drones could be the perfect solution for rapidly assessing damage from the air, and that they could capture images to be processed by AI computer vision systems. At first, I thought of using OCR (optical character recognition) to detect letters. The problem with this approach is that everyone has different handwriting, and making it work across other languages would be very complex.

After a few hours of coding, I pivoted and decided to simplify the visual recognition to work with a standard set of icons. These icons could be drawn with spray paint or chalk, or even placed on mats. Drones could detect those icons and communicate a community’s specific needs for food, water, and medicine to first responders. I coded the first iteration of DroneAid at that hackathon and won first place. This achievement pushed me to keep going. In fact, I joined IBM as a full-time developer advocate.

DroneAid is so much more than a piece of throwaway code from a hackathon. It’s evolved into an open source project that I am excited to announce today. I’m thrilled that IBM is committed to applying our solution through Code and Response, the company’s unique $25 million program dedicated to the creation and deployment of solutions powered by open source technology to tackle the world’s biggest challenges.

Open sourcing DroneAid through Code and Response

DroneAid leverages a subset of standardized icons released by the United Nations. These symbols can either be provided in a disaster preparedness kit ahead of time or recreated manually with materials someone may have on hand. A drone can survey an area for these icons placed on the ground by individuals, families, or communities to indicate various needs. As DroneAid detects and counts these images, they are plotted on a map in a web dashboard. This information is then used to prioritize the response of local authorities or organizations that can provide help.
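The counting and prioritizing step described above can be sketched in a few lines. This is only an illustration under stated assumptions, not DroneAid's actual dashboard code: the icon labels, the `PRIORITY_WEIGHT` table, the `build_markers` function, and the grid-cell size are all hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical icon labels and weights; the post does not specify
# the icon set or any prioritization rules.
PRIORITY_WEIGHT = {"medicine": 3, "water": 2, "food": 1}


def build_markers(detections, cell_deg=0.01):
    """Group (lat, lon, icon) detections into grid cells and count icons per cell."""
    cells = defaultdict(Counter)
    for lat, lon, icon in detections:
        # Snap each detection to a grid cell so nearby icons share one marker.
        key = (round(lat / cell_deg), round(lon / cell_deg))
        cells[key][icon] += 1

    markers = []
    for (row, col), counts in cells.items():
        # Weighted sum of icon counts; unknown icons default to weight 1.
        score = sum(PRIORITY_WEIGHT.get(icon, 1) * n for icon, n in counts.items())
        markers.append({
            "lat": round(row * cell_deg, 6),
            "lon": round(col * cell_deg, 6),
            "counts": dict(counts),
            "score": score,
        })
    # Highest score first, so the cells with the greatest weighted need lead the list.
    return sorted(markers, key=lambda m: m["score"], reverse=True)
```

Each returned marker carries a position, per-icon counts, and a score, which is roughly the information a map dashboard would need to draw a pin and rank it.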

From a technical point of view, that means that a visual recognition AI model is trained on the standardized icons so that it knows how to detect them in a variety of conditions (e.g., when they are distorted, faded, or in low light). IBM’s cloud annotations tool makes it straightforward to train AI using IBM Cloud Object Storage. This model is applied to a live stream of images coming from the drone as it surveys the area. Each video frame is analyzed to see if any icons are present. If they are, their location is captured and they are counted. Finally, this information is plotted on a map indicating the location and number of people in need.
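To make the per-frame step concrete, here is a minimal sketch of what "analyze a frame, then capture location and count" could look like once a model has returned its detections. The `Detection` type, the `summarize_frame` function, and the 0.5 confidence threshold are assumptions for illustration; the model itself is left out, and the project's real code may differ.

```python
from collections import Counter
from typing import NamedTuple, Optional


class Detection(NamedTuple):
    label: str    # icon class name, e.g. "water" (hypothetical label)
    score: float  # model confidence in [0, 1]


def summarize_frame(detections, drone_position, min_score=0.5) -> Optional[dict]:
    """Count confident icon detections in one frame and tag them with a location."""
    # Drop low-confidence detections; the threshold is an assumed default.
    kept = [d for d in detections if d.score >= min_score]
    if not kept:
        return None  # no icons found in this frame
    return {
        "position": drone_position,  # where the drone was for this frame
        "counts": dict(Counter(d.label for d in kept)),
    }
```

Returning `None` for empty frames keeps the downstream map logic simple: only frames that contain at least one confident icon produce a record.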

The system can be run locally by following the steps in the source code repository, starting with a simple Tello drone example. Any drone that can capture a video stream can be used, since the machine learning model leverages TensorFlow.js in the browser. This way we can capture the stream from any drone and apply inference to that stream. This architecture can then be applied to larger drones, different visual recognition types, and additional alerting systems.
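The idea of keeping inference independent of the drone can be shown with a small sketch. DroneAid itself runs its model with TensorFlow.js in the browser; the Python below is not that code, only an illustration of the design, and the `run_inference` function and its `detect` callback are hypothetical names.

```python
from typing import Any, Callable, Iterable, Iterator, Tuple


def run_inference(
    frames: Iterable[Any],
    detect: Callable[[Any], list],
) -> Iterator[Tuple[int, list]]:
    """Apply one detector to frames from any source, yielding non-empty results."""
    for index, frame in enumerate(frames):
        result = detect(frame)  # the detector never needs to know the drone model
        if result:
            yield index, result


# Usage with stand-in data: strings play the role of video frames, and a
# trivial function plays the role of the trained model.
fake_frames = ["...", ".W.", "..."]
fake_detect = lambda frame: ["water"] if "W" in frame else []
hits = list(run_inference(fake_frames, fake_detect))
```

Because the loop only asks for an iterable of frames and a callable, swapping a small drone for a larger one, or one model for another, would change the arguments rather than the loop.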

Calling all developers to collaborate in the DroneAid open source community

It’s been quite a journey so far and I feel like we’re just getting started. Let’s unite to help reduce loss of life, get victims what they need in a timely manner and help reduce the overall effects a natural disaster will have on a community.

Our team decided to open source DroneAid because we feel it’s important to make this technology available to as many people as possible. The standardized icon approach can be used around the world in many natural disaster scenarios (e.g., hurricanes, tsunamis, earthquakes, and wildfires), and having developers contribute by training the software on an ongoing basis can help increase our efficiency and expand how the symbols can be used together. We built the foundation for developers to create new applications, and we envision using this technology to deploy and control a fleet of drones as soon as a natural disaster hits.

Now that you understand how DroneAid can be applied to help communities in need, join us and contribute here: https://github.com/code-and-

Miriam McNabb is the Editor-in-Chief of DRONELIFE and CEO of JobForDrones, a professional drone services marketplace, and a fascinated observer of the emerging drone industry and the regulatory environment for drones. Miriam has penned over 3,000 articles focused on the commercial drone space and is an international speaker and recognized figure in the industry. Miriam has a degree from the University of Chicago and over 20 years of experience in high tech sales and marketing for new technologies.
