The Aerial Informatics and Robotics platform addresses these two problems: the large data needs for training, and the ability to debug in a simulator. It will provide realistic simulation tools for designers and developers to seamlessly generate the copious amounts of training data they need. In addition, the platform leverages recent advances in physics and perception computation to create accurate, real-world simulations. Together, this realism, based on efficiently generated ground-truth data, enables the study and execution of complex missions that might be time-consuming and/or risky in the real world. For example, collisions in a simulator cost virtually nothing, yet provide actionable information for improving the design.

A toolbox for rapid prototyping, testing, and deployment

Building a data-driven robotic system such as the Aerial Informatics and Robotics platform is full of challenges. First, it needs to support a wide variety of software and hardware. Second, given the breakneck speed of innovation in hardware, software, and algorithms, it must be flexible enough to be easily extended in multiple dimensions. The Aerial Informatics and Robotics framework follows a modular design to address these challenges.

The platform provides easy interfaces to common robotic platforms such as the Robot Operating System (ROS) and comes pre-loaded with commonly used aerial robotic models and several sensors. In addition, the platform enables high-frequency simulations with support for hardware-in-the-loop (HIL) as well as software-in-the-loop (SIL) simulations with widely supported protocols (e.g., MAVLink). Its cross-platform (Linux and Windows), open-source architecture is easily extensible to accommodate diverse new types of autonomous vehicles, hardware platforms, and software protocols. All this machinery allows users to quickly add custom robot models and new sensors to the simulator.

The platform is also designed to integrate with existing machine learning frameworks to generate new algorithms for perception and control tasks. Methods such as reinforcement and imitation learning, learning-by-demonstration, and transfer learning can leverage simulations and synthetically generated experiences to build realistic models.

Aerial robots: from perception to safe control

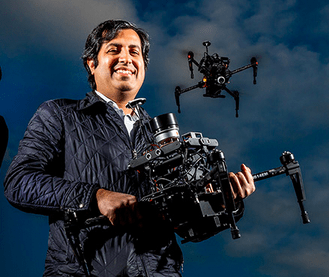

Quadrotors are the first vehicles to have been implemented in the platform. These aerial robots have applications in precision agriculture, pathogen surveillance, weather monitoring, and more. A camera is an integral part of these systems and often the only way for a quadrotor to perceive the world so that it can plan and execute its mission safely.

The platform enables seamless training and testing of perception systems such as cameras via realistic renderings of the environment. These synthetically generated images can yield orders of magnitude more perception and control data than is possible with real-world robot data alone.

This open-source, high-fidelity physics and photo-realistic robot simulator can help verify control and perception software, so that robot designers and developers can transfer their creations to the real world with the fewest possible changes.

Frank Schroth is editor in chief of DroneLife, the authoritative source for news and analysis on the drone industry: its people, products, trends, and events.

Twitter: @fschroth